Keys to understanding hyperconverged infrastructure

Hyperconverged Infrastructure (HCI) is an architectural approach that integrates the compute, storage and networking resources needed to run applications and workloads into a single system or platform. Instead of using separate hardware components, such as servers, storage systems and network switches, a hyperconverged infrastructure combines these elements into a set of highly integrated servers.

A hyperconverged infrastructure is managed through software. A centralized management layer provides a unified view of all resources and allows them to be managed efficiently. This software layer can also include features such as virtualization, data replication, data deduplication and compression, and virtual machine migration, among others.

Many IT managers recommend implementing it in their companies because of the benefits it brings. However, there is also some confusion about this solution. It is normal for questions to arise, such as: how is it different from traditional infrastructure? Is it worth implementing in my company? Here are some of the key concepts for understanding this system.

In the IT world, the term infrastructure is used to refer to the set of hardware and software components that serve as the basis for the software that companies use to develop their business.

When we talk about hyperconverged infrastructure, the term “infrastructure” refers to the minimum components typically needed to run an application. For example, for a browser to work, the computer must have a processor, memory and enough storage. In a data center, we have the same components on a much larger scale.

Understanding “Traditional” Infrastructure

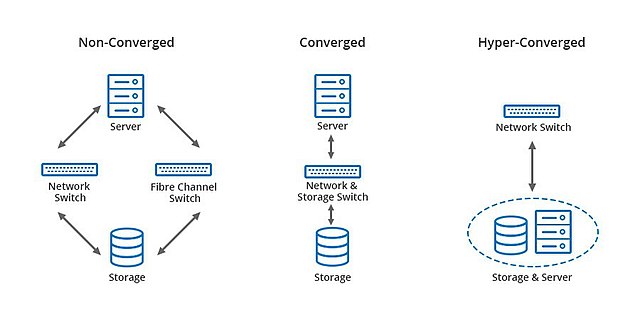

In "traditional" infrastructure, compute (processor and memory) is provided by physical servers, and storage by dedicated storage components (SAN arrays). When two or more physical servers need to access shared storage, an additional component, the SAN switch, is needed to connect the storage to the servers and carry the data flow. The term SAN (storage area network) comes from storage terminology, which also includes other types such as NAS (network-attached storage) and DAS (direct-attached storage).

Thus, we have three main components: servers, SAN switches and SAN arrays, which together provide a basic infrastructure unit to run applications. To administer and manage these components properly, we need very specific knowledge of each of their technologies, as well as a dedicated management tool for each one. This requires technical personnel whose knowledge covers all the products in our "traditional solution", which makes the day-to-day running of the infrastructure more expensive, both in the number of technicians we need and in the training and ongoing updating they require.

Another important aspect of traditional infrastructures is their single points of failure, which force us to make physical components redundant and to add software. This further increases the complexity of managing the environment, on top of the extra cost of these additions.

Source: Profesional Review

Origin of hyperconverged infrastructure

To alleviate this situation, converged infrastructures appeared first. In these, a single manufacturer offered the same components as in the traditional option, but delivered and managed in a unified and centralized manner. This simplified the administration, management and operation of the infrastructure, while maintaining all the physical components of a traditional solution.

However, this system of separate components could no longer meet the current needs of large cloud environments in terms of simplicity of management and cost efficiency. Thus, in 2012 hyperconverged infrastructures or “HCI” (Hyper Converged Infrastructure) emerged.

If a physical server can carry its own storage (DAS), why not use software to bring together the storage of several servers into a single storage entity and offer it in a unified way, just as a SAN array does?

This is precisely what hyperconvergence does: it joins several physical servers that already provided compute and memory, has them provide storage as well, and adds a software layer that presents all the disks of all the servers as a single storage entity.
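The pooling idea described above can be illustrated with a minimal sketch. The `Node` and `StoragePool` classes and the disk sizes are hypothetical, chosen only for illustration; they do not correspond to any vendor's software, and a real hyperconverged layer also handles data placement, replication and failures.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One physical server contributing its local (DAS) disks. Hypothetical model."""
    name: str
    disk_sizes_tb: list[float]

    @property
    def raw_tb(self) -> float:
        # Raw capacity of this server's local disks
        return sum(self.disk_sizes_tb)


@dataclass
class StoragePool:
    """Hypothetical software layer that presents all node disks as one entity."""
    nodes: list[Node] = field(default_factory=list)

    def add_node(self, node: Node) -> None:
        self.nodes.append(node)

    @property
    def disk_count(self) -> int:
        return sum(len(n.disk_sizes_tb) for n in self.nodes)

    @property
    def raw_tb(self) -> float:
        # The pool exposes the sum of all local disks as a single capacity figure
        return sum(n.raw_tb for n in self.nodes)


pool = StoragePool()
for i in range(3):
    # Assumed example: three servers with four 4 TB disks each
    pool.add_node(Node(f"node-{i + 1}", [4.0, 4.0, 4.0, 4.0]))

print(pool.disk_count, pool.raw_tb)  # prints "12 48.0"
```

From the consumer's point of view there is one pool with 12 disks and 48 TB of raw capacity, even though the disks physically sit in three separate servers.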

Before hyperconvergence, and because many data centers contained multiple storage systems, Software Defined Storage (SDS) solutions were developed, in which software running on a central storage node could group several physical arrays into one logical entity. In practice, however, this did not simplify the infrastructure of a data center; it added even more complexity and cost.

Another element that has favored hyperconvergence is that very high-speed Ethernet network connections (10 Gb/s or more) have become popular and cheap enough. This allows data to move between the disks of a hyperconverged system fast enough to compete with a traditional SAN system and even improve on its performance.
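To give a sense of scale, here is a rough back-of-the-envelope calculation. It assumes the figure above refers to 10 gigabit-per-second Ethernet, uses a hypothetical 100 GB data set, and ignores protocol overhead, disk speed and contention, so the results are theoretical lower bounds rather than measurements.

```python
def transfer_seconds(data_gigabytes: float, link_gigabits_per_second: float) -> float:
    """Ideal time to move data over a link, ignoring all overhead (assumption)."""
    data_gigabits = data_gigabytes * 8  # 1 byte = 8 bits
    return data_gigabits / link_gigabits_per_second


# Assumed example: moving 100 GB between two servers
print(transfer_seconds(100, 1))   # 1 Gb/s link  -> 800.0 seconds
print(transfer_seconds(100, 10))  # 10 Gb/s link -> 80.0 seconds
```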

Managing hyperconverged storage

With hyperconverged systems we have eliminated two components that introduced complexity and cost: SAN switches and SAN arrays. However, a new component appears that must be managed: hyperconverged storage.

Because the solution relies entirely on servers that provide the necessary drives, some manufacturers have developed a management system that covers both servers and storage, and even the server virtualization layer, as Nutanix does with its AOS hyperconverged software. Others have integrated the hyperconverged layer into their server virtualization system, as VMware does with its vSAN hyperconverged software.

Benefits of Hyperconverged Infrastructure

- Easy to deploy: HCI systems include wizards that deploy a complete solution in just hours. A very basic initial configuration is performed on each of the servers that will form the HCI unit, and the wizard then carries out the necessary steps on each server to deploy the complete HCI solution. The same applies to later expansions.

- Simplified management: Because it is a software-defined solution, tasks that were previously complex have been reduced to simple ones, and the environment is prepared to automate administration and operation tasks from the management console. In addition, these systems incorporate APIs to integrate and manage the HCI infrastructure from a global IT asset management portal.

- Always up to date without service disruption: Although it depends on the manufacturer of the HCI solution, the necessary updates across the whole system, from the BIOS of the physical servers to new functionality or a patch for a problem, are scheduled regularly and applied in a very simple way. The premise is that updates are made with "a single click" and that the service being provided should not be stopped.

- Scalability: Growth is achieved through "scale-out" or horizontal scaling, simply by adding more servers and incorporating them into the HCI cluster using the facilities included in the management console. These servers add more computing power and storage to our HCI infrastructure.

- Availability of the environment: HCI environments have been designed to tolerate failures in the components that make up the infrastructure, both individual server disks and complete servers. At a minimum, the failure of a full server is withstood without service disruption, and without the need to configure high availability separately.

- Disaster Recovery: Top HCI vendors have natively incorporated capabilities that allow us to design a disaster recovery solution for the primary data center. Storage virtualization makes it possible to keep any type of data from the HCI environment replicated in another HCI environment, ready to go into service if needed.

- Speed in disk access: With a well-configured HCI system, we can practically forget about the IOPS problems (IOPS, or input/output operations per second, measure how quickly a storage system serves the reads and writes of the programs that need the data) that we used to have with traditional arrays. Because it is a software-based system, techniques such as caching in RAM, tiering with SSDs and placing data close to the workload that uses it have eliminated traditional bottlenecks.

- Cost reduction: These types of platforms reduce costs thanks to the advantages explained above, whether in time, staff or infrastructure. In fact, this reduction not only means that HCI is cheaper than a traditional solution, but also opens up new possibilities at a lower cost, such as replication between data centers.
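The scalability and availability points in the list above can be sketched with a simplified capacity model. It assumes that the software keeps a fixed number of copies of every block on different servers (a "replication factor", a term not used in the original text); the function names and figures are hypothetical, and real products reserve additional capacity for rebuilds and metadata.

```python
def usable_capacity_tb(node_raw_tb: list[float], replication_factor: int) -> float:
    """Usable capacity when every block is stored replication_factor times (simplified)."""
    return sum(node_raw_tb) / replication_factor


def tolerated_node_failures(node_count: int, replication_factor: int) -> int:
    """Server failures survivable without data loss, assuming copies sit on distinct servers."""
    if node_count < replication_factor:
        return 0  # not enough servers to place every copy on a different one
    return replication_factor - 1


# Assumed example: three servers with 16 TB of raw disk each, two copies of each block
cluster = [16.0, 16.0, 16.0]
print(usable_capacity_tb(cluster, 2))             # 24.0
print(tolerated_node_failures(len(cluster), 2))   # 1

# Scale-out: adding a fourth server grows usable capacity
cluster.append(16.0)
print(usable_capacity_tb(cluster, 2))             # 32.0
```

Under these assumptions, the cluster survives the loss of one full server, and each added server contributes both compute and half of its raw disk capacity as usable storage.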

We hope to have clarified any doubts about this system. However, if you have any additional questions or are considering implementing a hyperconverged infrastructure in your company, at Serban Group, together with Nutanix, we offer you this demo where you can experience the Nutanix software, launch an HCI platform and see for yourself all the benefits it can offer.